In mid-July 2024, OpenAI released a new model: GPT-4o Mini.

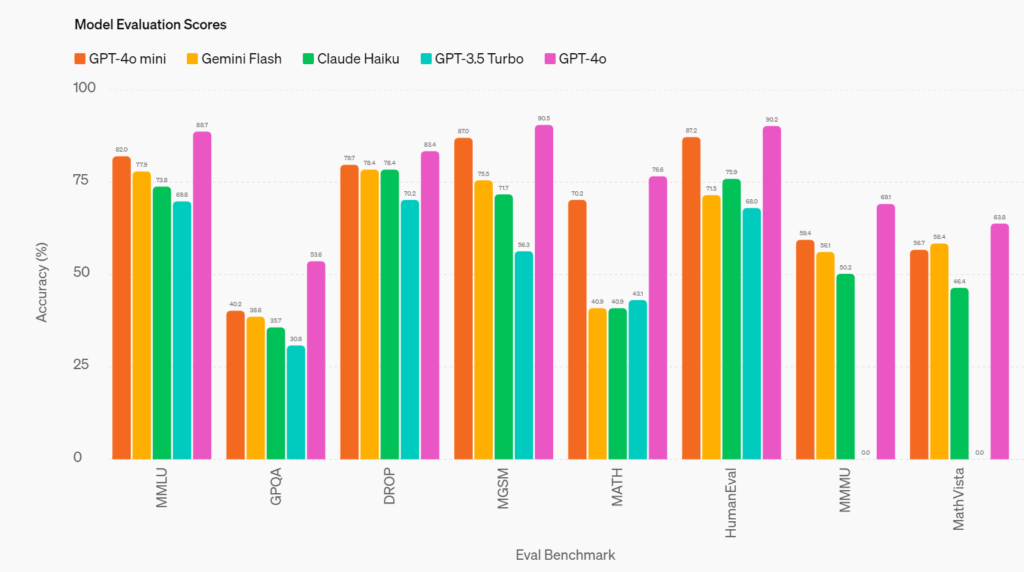

GPT-4o Mini was touted as a cheaper, faster model that was just as good as, and probably better than, the previous model, GPT-3.5 Turbo.

So I thought I’d give it a go and see whether it was indeed cheaper while giving the same or better results.

I have an AI-enabled Foal Name Generator that has generated over 4 million names for horse owners all over the world.

The way it uses AI is fairly straightforward in the scheme of things: the form asks you for the attributes of your new foal, and then it crafts a prompt asking ChatGPT to produce 20 names.
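To make that flow a little more concrete, here's a minimal sketch of the glue on either side of the API call: turning form fields into a prompt, and splitting the model's reply back into a list of names. The attribute names (`breed`, `colour`, `sex`), the prompt wording, and the helpers `build_foal_prompt` and `parse_names` are hypothetical illustrations, not the generator's actual code.

```python
import re


def build_foal_prompt(attributes: dict[str, str], count: int = 20) -> str:
    """Turn form fields into one prompt string (hypothetical wording)."""
    # Skip any fields the user left blank.
    details = "; ".join(f"{key}: {value}" for key, value in attributes.items() if value)
    return (
        f"Suggest {count} unique names for a newborn foal with these attributes: "
        f"{details}. Return one name per line with no numbering."
    )


def parse_names(reply: str, count: int = 20) -> list[str]:
    """Split the model's reply into at most `count` cleaned-up names."""
    names = []
    for line in reply.splitlines():
        # Strip list markers such as "1.", "2)", "-" or "*" that models often add anyway.
        name = re.sub(r"^\s*(?:\d+[.)]|[-*])\s*", "", line).strip()
        if name:
            names.append(name)
    return names[:count]
```

Asking for one name per line and still stripping list markers is a defensive choice: the model doesn't always follow formatting instructions exactly, so the parser tolerates both styles.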

Changing to the new model was easy: you just change the model name you pass to the `openai` library.
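One note before my snippet: it uses the module-level `openai.ChatCompletion.create` call from the pre-1.0 `openai` package, and that interface was removed in version 1.0. If you're on 1.x, the same request goes through a client object instead. Below is a minimal sketch, assuming `openai>=1.0` and an `OPENAI_API_KEY` environment variable; `build_chat_request` and `generate_names_text` are hypothetical helper names, and the parameters simply mirror my snippet.

```python
# Sketch for openai>=1.0, where the module-level openai.ChatCompletion no longer exists.


def build_chat_request(prompt: str, model: str = "gpt-4o-mini") -> dict:
    """Collect the chat-completion arguments; only `model` changes between models."""
    return {
        "model": model,  # previously "gpt-3.5-turbo"
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 1,
        "max_tokens": 150,
    }


def generate_names_text(prompt: str, model: str = "gpt-4o-mini") -> str:
    """Send the request with the 1.x client and return the model's reply text."""
    # Imported lazily so build_chat_request can be used without the package installed.
    from openai import OpenAI

    client = OpenAI()  # picks up OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**build_chat_request(prompt, model))
    return response.choices[0].message.content
```

Either way, switching models comes down to the `model` string.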

import openai

open_ai_model = "gpt-4o-mini" # Changed from 'gpt-3.5-turbo'

prompt = "Some prompt..."

response = openai.ChatCompletion.create(

model=open_ai_model,

messages=[{"role": "user", "content": prompt}],

temperature=1,

max_tokens=150

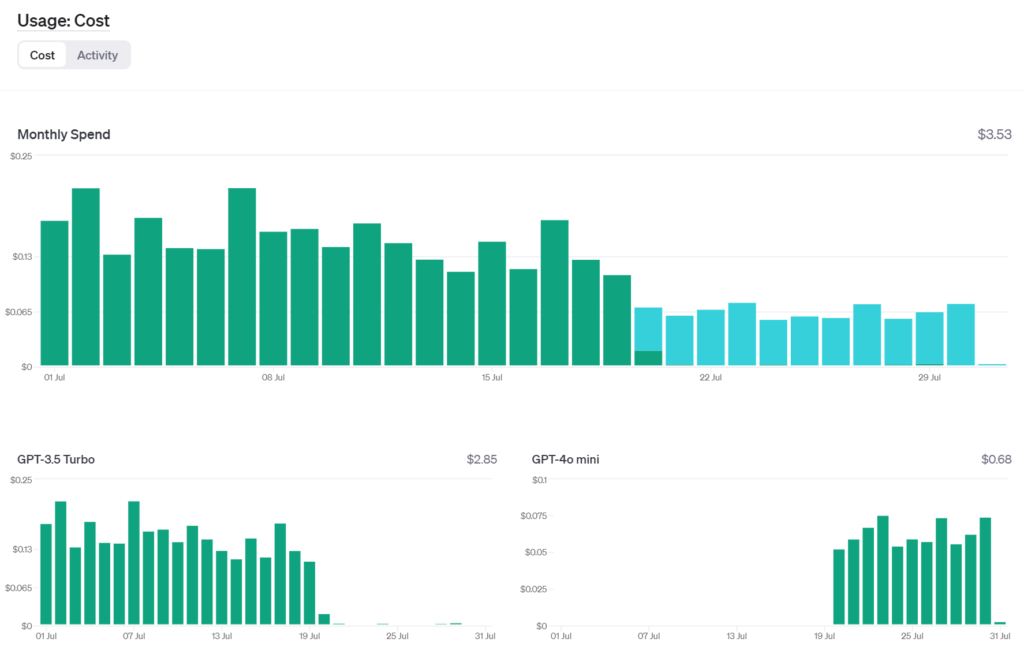

)And here are the results – the light blue colour is GPT-4o Mini:

Usage is shown in a separate graph, but it remained consistent throughout the month.

As you can see, my costs have halved with no degradation in results: names are generated just fine.
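If you want to estimate the saving for your own workload before switching, the arithmetic is just tokens times price, split into input (prompt) and output (completion) tokens. Both the legacy and 1.x clients report per-request counts in the response's `usage` field (`prompt_tokens`, `completion_tokens`). The prices below are assumptions: they are the per-million-token list prices as I understand them around the July 2024 launch, so check OpenAI's pricing page before relying on them. `estimate_cost` and `cost_ratio` are hypothetical helpers.

```python
# ASSUMED list prices in USD per 1M tokens around July 2024;
# verify against OpenAI's current pricing before relying on them.
PRICES_PER_MILLION = {
    "gpt-3.5-turbo": {"input": 0.50, "output": 1.50},
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost for the given prompt (input) and completion (output) tokens."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


def cost_ratio(input_tokens: int, output_tokens: int) -> float:
    """Cost of GPT-4o Mini as a fraction of GPT-3.5 Turbo for the same token counts."""
    new = estimate_cost("gpt-4o-mini", input_tokens, output_tokens)
    old = estimate_cost("gpt-3.5-turbo", input_tokens, output_tokens)
    return new / old
```

The ratio depends on your mix of input and output tokens, and the two models don't necessarily use the same number of tokens for the same request, so a list-price comparison like this is only a rough guide to what your bill will do.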

So, all in all, switching to GPT-4o Mini has been a net gain, and for many use cases it's a good change.

Check it out yourself: Foal Name Generator