Do you love ChatGPT but don’t want your information being sent to the USA?

Do you like DeepSeek but don’t want your information going to China?

Well – the solution is to host models like these locally! Right on your laptop or desktop!

There are a number of tools that do this for you, but the one I’ve found easiest to use is LM Studio.

Why Choose LM Studio?

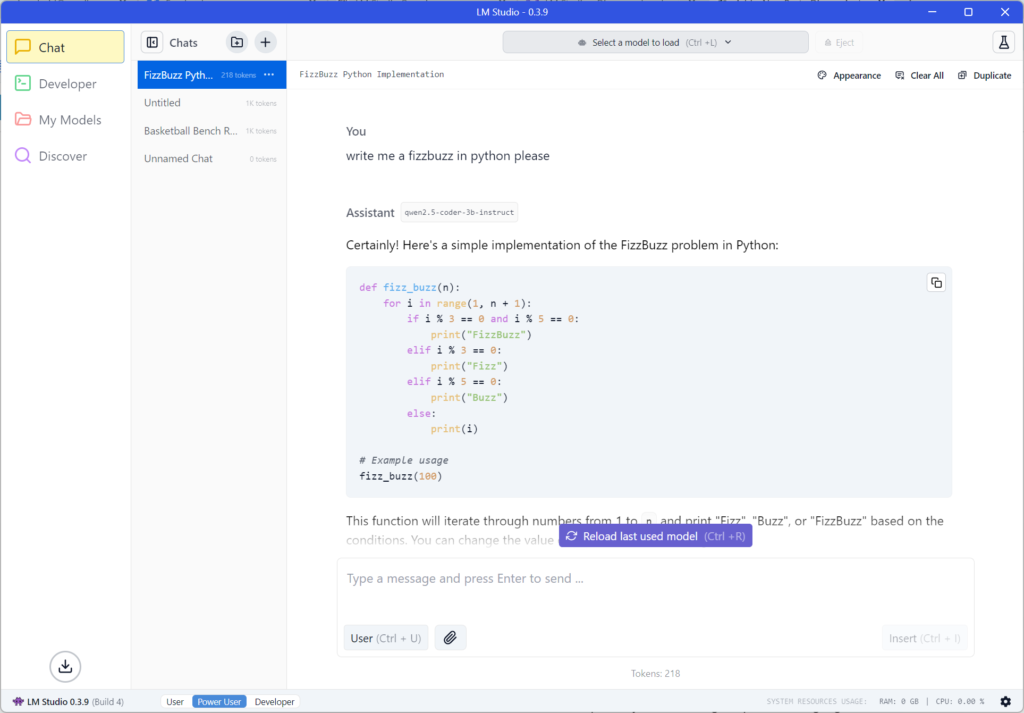

LM Studio simplifies the process of deploying LLMs locally. It is a modern, easy-to-use desktop application that works the way you would expect.

Upon installation, it provides immediate access to a default model, allowing users to experience its capabilities without delay.

For those interested in exploring alternative models, the ‘Discover’ feature offers a curated selection.

Models like DeepSeek can be easily downloaded and integrated, tailoring the experience to specific needs.

Benefits of Running LLMs Locally

- Data Privacy: By processing data on your local machine, you eliminate the risks associated with transmitting sensitive information to external servers.

- Performance: Local deployment removes the network round trip to a remote server, so responses can start sooner, although generation speed depends on your hardware.

- Control: With local models, you have full control over updates, configurations, and customizations, allowing for a more personalized experience.

- No internet needed: After a model is installed, you can use it without an internet connection.

Getting Started with LM Studio

- Installation: Begin by downloading the LM Studio installer compatible with your operating system from the official website.

- Initial Setup: Launch the application and follow the on-screen instructions to set up the default model.

- Exploring Models: Navigate to the ‘Discover’ section to browse and select models like DeepSeek. Click ‘Download’ to integrate them into your local environment.

- Deployment: Once downloaded, select your preferred model and start interacting with it directly on your machine.

LM Studio screenshot. Yes, it looks a lot like ChatGPT!
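If you would rather talk to the downloaded model from a script than from the chat window, LM Studio can also run a local server that accepts OpenAI-style requests. The sketch below is a minimal example using only the Python standard library. It assumes the server has been started from within LM Studio and is listening on the default address `http://localhost:1234/v1`; the helper names `build_chat_request` and `ask_local_model`, and the model identifier in the usage comment, are placeholders made up for illustration.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model, temperature=0.7):
    """Build an OpenAI-style chat completion payload for a single user prompt."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def ask_local_model(prompt, model, base_url=BASE_URL):
    """Send the prompt to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Usage (needs the local server running and a model loaded):
# print(ask_local_model("Say hello in one sentence.", "your-model-identifier"))
```

Because the request goes to `localhost`, the prompt never leaves your machine, which is the whole point of hosting locally.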

What models to install?

I personally have a couple of models installed:

DeepSeek: DeepSeek-R1-Distill-Llama-8B-GGUF/DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf

DeepSeek is a very good model that shows you the reasoning behind its answers. Unfortunately it is a bit slow, but if you need a clear explanation of how the model arrived at its answer, DeepSeek is for you.
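If you use the reasoning output in your own scripts, it helps to separate it from the final answer. The sketch below assumes the model wraps its reasoning in `<think>...</think>` tags, which is how the DeepSeek-R1 family usually formats it; if your model or settings format the reasoning differently, the pattern will need adjusting. The function name `split_reasoning` and the sample reply are made up for illustration.

```python
import re

# Assumption: the reasoning is wrapped in a single <think>...</think> block.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(reply):
    """Return (reasoning, answer) from a reply that may contain a <think> block."""
    match = THINK_BLOCK.search(reply)
    if match is None:
        # No reasoning block found: treat the whole reply as the answer.
        return "", reply.strip()
    reasoning = match.group(1).strip()
    answer = (reply[:match.start()] + reply[match.end():]).strip()
    return reasoning, answer


sample = "<think>The user wants 2 + 2. That is 4.</think>\nThe answer is 4."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```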

Qwen: Qwen2.5-Coder-3B-Instruct-GGUF/qwen2.5-coder-3b-instruct-q4_0.gguf

I code as a job – so having a coding model is great.

I also think the Llama models are pretty good. They are good to install if you want a ChatGPT-like experience.

As time goes by I will no doubt upgrade these models.
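When comparing candidates for an upgrade, the file names above already tell you a lot: the `8B` or `3b` part is the parameter count in billions, and the `Q4_K_M` or `q4_0` part is the quantization level. The sketch below pulls both out of a file name and gives a very rough size estimate. The bits-per-weight figure is an assumption you pass in yourself (about 4.5 is a reasonable ballpark for 4-bit quantizations), so treat the result as a lower-end guess rather than the real file size. The names `parse_gguf_name` and `rough_size_gb` are hypothetical helpers, not part of LM Studio.

```python
import re

# Matches names such as "DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf".
GGUF_NAME = re.compile(
    r"(?P<params>\d+(?:\.\d+)?)[Bb].*?[-.](?P<quant>[Qq]\d\w*)\.gguf$"
)


def parse_gguf_name(filename):
    """Return (parameters in billions, quantization label) from a GGUF file name."""
    match = GGUF_NAME.search(filename)
    if match is None:
        raise ValueError(f"Unrecognized GGUF file name: {filename}")
    return float(match.group("params")), match.group("quant").upper()


def rough_size_gb(params_billion, bits_per_weight):
    """Rough weight size in GB: parameters times bits per weight, divided by 8."""
    return params_billion * bits_per_weight / 8


for name in (
    "DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf",
    "qwen2.5-coder-3b-instruct-q4_0.gguf",
):
    params, quant = parse_gguf_name(name)
    # 4.5 bits per weight is an assumed ballpark for 4-bit quantizations.
    print(name, params, quant, rough_size_gb(params, 4.5))
```

If the estimate is close to or larger than your available RAM or VRAM, a smaller model or a lower quantization level is probably the safer choice.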

Conclusion

By hosting LLMs locally, you gain the dual advantages of enhanced performance and stringent data control.

Whether you’re a developer, researcher, or enthusiast, LM Studio offers a seamless pathway to harnessing the power of language models without compromising on privacy.

A video from the LM Studio folks: